If you work on large language models, you already think in vector spaces. Words, sentences, documents, and activations are represented as points in a high-dimensional space, and cosine similarity stands in for “meaning.” The Geometric Whorfian Hypothesis (GWH) starts from that familiar picture and makes a stronger claim: LLM embedding spaces should be treated as Riemannian, language-conditioned models of human conceptual geometry, not simply as Euclidean containers for co-occurrence statistics. On this view, embeddings are not just a convenient compression of statistical data; they are our first large-scale, manipulable approximations to how human minds carve up meaning.

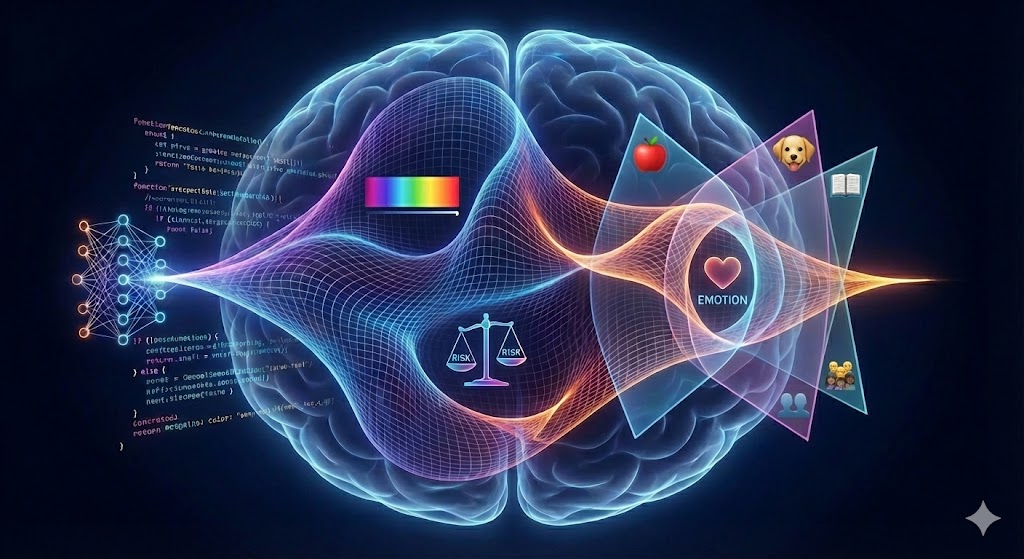

GWH fuses three strands. First, modern linguistic relativity: languages and cultures shift how people categorize, infer, and remember. They do this not by giving speakers “different worlds” in the classic Whorfian sense, but by changing probabilistic inference, category boundaries, and similarity structure. Second, the conceptual spaces framework in cognitive science: concepts are regions in a structured space spanned by interpretable dimensions such as hue, brightness, pitch, or risk, where similarity literally decreases with distance and prototypes sit near the centers of those regions. Third, the embedding machinery of contemporary NLP: learned high-dimensional spaces in which semantic similarity tracks distance, and linear structure supports analogies and control. GWH treats LLM embeddings as language-specific and context-specific geometries over a shared manifold of meanings, constrained by and evaluated against human cognitive data.
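A minimal sketch of the conceptual-spaces picture, assuming a toy two-dimensional space: each concept is represented by a prototype point, a stimulus is assigned to the nearest prototype (which carves the space into Voronoi cells), and similarity decays exponentially with distance, in the spirit of Shepard's law of generalization. The prototype coordinates and the sensitivity parameter `c` are illustrative assumptions, not psychophysical measurements.

```python
import math

# Toy 2-D conceptual space with axes (hue, brightness), both in [0, 1].
# Hue is treated as linear here for simplicity; perceptual hue is really circular.
# Prototype coordinates are illustrative assumptions, not measured values.
PROTOTYPES = {
    "red": (0.00, 0.50),
    "yellow": (0.17, 0.80),
    "green": (0.33, 0.50),
    "blue": (0.66, 0.40),
}


def similarity(p, q, c=3.0):
    """Shepard-style similarity: exp(-c * distance), so identical points score 1."""
    return math.exp(-c * math.dist(p, q))


def categorize(point):
    """Assign a point to the concept with the nearest prototype (its Voronoi cell)."""
    return min(PROTOTYPES, key=lambda name: math.dist(point, PROTOTYPES[name]))


stimulus = (0.30, 0.55)
print(categorize(stimulus), round(similarity(stimulus, PROTOTYPES["green"]), 3))
```

Because every point goes to its nearest prototype, the category boundaries are determined entirely by the metric; change how distance is measured and the boundaries move, which is the kind of effect GWH attributes to language.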

From this perspective, linguistic relativity becomes explicitly geometric. The categories your language gives you, for example color terms, spatial frames, and number systems, do not merely label a conceptual space. They can actually warp it. Perceptual color space is approximately continuous and low-dimensional, yet languages impose different boundaries and distort perceived distances. Speakers with a single term for blue and green cluster colors differently from speakers who sharply distinguish “blue” from “green.” In GWH, that is not a loose metaphor. The underlying manifold is the same, but languages induce different metrics and partitions, so distances, neighborhoods, and geodesics through conceptual space become language dependent.
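One way to make “languages induce different metrics” concrete is a toy model of categorical perception on a one-dimensional hue line: physical separation is the same for every speaker, but each category boundary lying between two hues adds a fixed penalty to their distance. The boundary at 0.5, the penalty `LAMBDA`, and the additive-penalty form itself are hypothetical choices for illustration, not a model taken from the literature.

```python
# Toy model of category-induced warping on a 1-D hue continuum in [0, 1].
# The boundary position and the penalty LAMBDA are hypothetical values.
LAMBDA = 0.2  # extra distance charged for each category boundary crossed

LANGUAGE_BOUNDARIES = {
    "grue_language": [],            # one term covers both blue and green
    "blue_green_language": [0.5],   # separate terms, boundary at hue 0.5
}


def warped_distance(x, y, boundaries, lam=LAMBDA):
    """Physical separation |x - y| plus lam for every boundary b with min < b <= max."""
    lo, hi = min(x, y), max(x, y)
    crossed = sum(1 for b in boundaries if lo < b <= hi)
    return (hi - lo) + lam * crossed


pair_across = (0.45, 0.55)  # straddles the blue/green boundary
pair_within = (0.60, 0.70)  # same physical separation, no boundary between them

for language, bounds in LANGUAGE_BOUNDARIES.items():
    print(
        language,
        round(warped_distance(*pair_across, bounds), 3),
        round(warped_distance(*pair_within, bounds), 3),
    )
```

Counting boundaries in the half-open interval between the two points is additive along the line, so adding that count to |x − y| still yields a metric. In the blue/green language the straddling pair comes out three times farther apart than the within-category pair, while in the grue language the two pairs are equally distant.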

Riemannian geometry enters when you take context and emotion seriously. Semantic distance in human cognition is not uniform. Under financial anxiety, “risk” is pulled toward “loss” and away from “opportunity.” For a trader, “risk” is near “volatility” and “leverage.” For a clinician, it is near “symptoms” and “interventions.” Under moral threat, “contamination,” “impurity,” and “danger” can collapse into a tight cluster; in neutral reflection, they spread apart again. That looks like curvature: regions where the space is stretched, compressed, or bent by affect, expertise, and social framing. The manifold of possible meanings is shared, but emotional states, roles, and cultures induce different local geometries on it, that is, different metrics and curvatures. In LLM terms, context is not just “more tokens in the window.” It is a temporary modification of the metric on the semantic manifold, one that changes which directions are cheap to move in and which distinctions are sharpened or blurred.
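The idea that context modifies the metric can be sketched with a position-dependent metric tensor G(x): the length of a path is the sum of sqrt(dxᵀ G(x) dx) over its small steps. The two-dimensional space, the coordinates for “risk,” “loss,” and “opportunity,” and both metric functions below are hypothetical. The code measures only the straight segment between two points, which is an upper bound on the true geodesic distance rather than the geodesic itself.

```python
import math


def path_length(a, b, metric, steps=1000):
    """Riemannian length of the straight segment from a to b.

    metric(x) returns the diagonal entries of a (hypothetical) metric tensor G(x);
    each step dx contributes sqrt(dx^T G(x_mid) dx). The geodesic distance is the
    minimum over all paths, so this straight-line length only upper-bounds it.
    """
    dim = len(a)
    dx = [(b[k] - a[k]) / steps for k in range(dim)]
    total = 0.0
    for i in range(steps):
        mid = [a[k] + (i + 0.5) * dx[k] for k in range(dim)]  # midpoint of step i
        g = metric(mid)
        total += math.sqrt(sum(g[k] * dx[k] ** 2 for k in range(dim)))
    return total


# Toy 2-D space: axis 0 = valence (negative < 0 < positive), axis 1 = another feature.
# Coordinates and metrics are illustrative assumptions, not fitted to any data.
RISK, LOSS, OPPORTUNITY = (0.0, 0.0), (-1.0, 0.0), (1.0, 0.0)


def neutral_metric(x):
    return [1.0, 1.0]  # flat Euclidean metric everywhere


def anxious_metric(x):
    # Negative-valence region compressed (lengths x0.5), positive region stretched (x2).
    return [0.25, 1.0] if x[0] < 0 else [4.0, 1.0]


for name, g in [("neutral", neutral_metric), ("anxious", anxious_metric)]:
    print(name, round(path_length(RISK, LOSS, g), 3), round(path_length(RISK, OPPORTUNITY, g), 3))
```

Under the neutral metric both pairs sit at distance 1; under the hypothetical anxious metric “loss” ends up at 0.5 and “opportunity” at 2.0 from “risk.” This toy metric varies along a single axis, so a re-parametrization of that axis would flatten it; genuine curvature requires variation that no change of coordinates removes, which this sketch does not attempt to show.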

For LLM research, this reframes several things. Similarity becomes explicitly metric-conditioned, varying with language and context rather than being a single global cosine. Human cognitive data, for example similarity judgments, category boundaries, reaction times, and framing effects, become constraints on the learned metric rather than just downstream evaluation signals. Multilingual models stop being “one big space for all tokens” and become language-conditioned geometries over a shared manifold, with cross-lingual alignment defined as learning maps between differently curved spaces. Prompting, persona conditioning, and safety steering can be described as geometric operations: moving the current point, modifying the local metric, or constraining the allowed paths through the space.
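Treating human judgments as constraints on the metric can be sketched as simple metric learning. A minimal version, assuming a diagonal metric: if a judged dissimilarity d between items x and y satisfies d² ≈ Σₖ wₖ (xₖ − yₖ)², the weights wₖ enter linearly and ordinary least squares recovers them. The judgments below are synthetic, generated from known weights so the fit can be checked; the model, the helper names, and the data are all assumptions for illustration.

```python
import math


def solve(A, b):
    """Solve the square system A w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [list(row) + [b[i]] for i, row in enumerate(A)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    w = [0.0] * n
    for i in reversed(range(n)):  # back-substitution
        w[i] = (M[i][n] - sum(M[i][j] * w[j] for j in range(i + 1, n))) / M[i][i]
    return w


def fit_diagonal_metric(pairs, judged):
    """Least-squares weights w with judged^2 ~ sum_k w_k * (x_k - y_k)^2.

    Negative weights are clipped to 0 afterwards, a crude projection rather
    than a properly constrained fit.
    """
    dim = len(pairs[0][0])
    features = [[(x[k] - y[k]) ** 2 for k in range(dim)] for x, y in pairs]
    targets = [d ** 2 for d in judged]
    # Normal equations: (F^T F) w = F^T t
    gram = [[sum(f[i] * f[j] for f in features) for j in range(dim)] for i in range(dim)]
    rhs = [sum(f[i] * t for f, t in zip(features, targets)) for i in range(dim)]
    return [max(0.0, wi) for wi in solve(gram, rhs)]


# Synthetic "judgments" generated from known weights, so the fit can be checked.
TRUE_W = [4.0, 0.25]
PAIRS = [((0, 0), (1, 0)), ((0, 0), (0, 1)), ((0, 0), (1, 1)), ((0.5, 0.5), (0, 1))]
JUDGED = [math.sqrt(sum(w * (x[k] - y[k]) ** 2 for k, w in enumerate(TRUE_W))) for x, y in PAIRS]

print([round(w, 4) for w in fit_diagonal_metric(PAIRS, JUDGED)])
```

Clipping negative weights to zero is only a rough way to keep the result a valid (pseudo)metric; a more careful version would solve a constrained problem or fit a full positive semidefinite matrix.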

The payoffs are concrete. For cross-lingual and cross-cultural alignment, you can explicitly map how concepts such as “harm,” “fairness,” or “consent” sit in differently curved spaces across languages, then design geometric transformations between them. For interpretability and safety, you can track how the neighborhoods around value-laden concepts shift under different prompts, user personas, or fine-tunes, and you can detect when adversarial prompting flattens important moral distinctions. For model design, you can encourage low-dimensional, interpretable submanifolds in domains such as color, space, or risk, and you can regularize the metric toward known psychophysical geometries instead of hoping they emerge from scale.
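Tracking how neighborhoods shift can be sketched by comparing the k-nearest-neighbor sets of the same word in two embedding tables, for example vectors extracted before and after a fine-tune or under two prompts, and scoring their Jaccard overlap. The two tiny tables below are hypothetical two-dimensional stand-ins, not vectors from any real model.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors."""
    return sum(a * b for a, b in zip(u, v)) / (math.hypot(*u) * math.hypot(*v))


def top_k_neighbors(word, table, k):
    """The k words in `table` most cosine-similar to `word`, excluding `word` itself."""
    others = sorted(
        (w for w in table if w != word),
        key=lambda w: cosine(table[word], table[w]),
        reverse=True,
    )
    return set(others[:k])


def neighborhood_overlap(word, table_a, table_b, k=2):
    """Jaccard overlap of `word`'s k-nearest-neighbor sets in two embedding tables."""
    a = top_k_neighbors(word, table_a, k)
    b = top_k_neighbors(word, table_b, k)
    return len(a & b) / len(a | b)


# Hypothetical 2-D vectors standing in for embeddings from a base model and a fine-tune.
BASE = {
    "harm": (1.0, 0.0),
    "injury": (0.9, 0.1),
    "damage": (0.95, -0.05),
    "insult": (0.6, 0.8),
    "joke": (0.0, 1.0),
}
TUNED = dict(BASE, harm=(0.7, 0.7), joke=(0.1, 1.0))

print(top_k_neighbors("harm", BASE, 2), top_k_neighbors("harm", TUNED, 2))
print(round(neighborhood_overlap("harm", BASE, TUNED), 3))
```

A low overlap only says that the neighborhood moved; whether the move flattens a distinction that matters still has to be judged against human data.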

GWH is a research program rather than a finished theory. Many of its implications will be controversial, but the program is grounded in established work in cognitive linguistics, conceptual spaces, differential geometry, and recent AI research. The goal is to build tools that map “semantic ecologies,” probe and reshape LLM embedding spaces using human data as geometric supervision, and design interfaces where people can inspect how a model currently “sees” a domain and nudge that geometry toward healthier forms. The core thesis is straightforward: if language shapes thought, it does so by shaping the space in which thinking happens, and LLMs are our best current approximations to those spaces. GWH is about learning to read, compare, and carefully redesign that geometry so that human minds and machine models inhabit compatible, inspectable conceptual worlds. Internal models that represent both the external world and the agent observing and acting in it are plausible core components of consciousness in humans and other biological organisms, and the same style of internal modeling offers a route to analogous structures in artificial intelligences and non-organic life.